What Might Good AI Policy Look Like? Four Principles for a Light Touch Approach to Artificial Intelligence

So far this month, the Biden administration has issued a significant executive order on artificial intelligence (AI), the United Kingdom has hosted the AI Safety Summit on AI governance, and a growing number of AI bills have been introduced in Congress. Much of the conversation around AI policy has rested on a presumption that this technology is inherently dangerous and in need of government intervention and regulation. This marks a major shift away from the more permissionless approach that has allowed innovation and entrepreneurship in other technologies to flourish in the US, and toward the more regulatory and precautionary approach that has often stifled innovation in Europe.

So, what would a light-touch approach look like? I suggest that to embrace the same light-touch approach that allowed the internet to flourish, policymakers should consider four key principles.

Principle 1: A Thorough Analysis of Existing Applicable Regulations with Consideration of Both Regulation and Deregulation

Underlying much of the conversation around potential AI regulation is a presumption that AI will require new rules. This is also a one-sided view of regulation, as it does not consider how existing regulation may get in the way of beneficial AI applications.

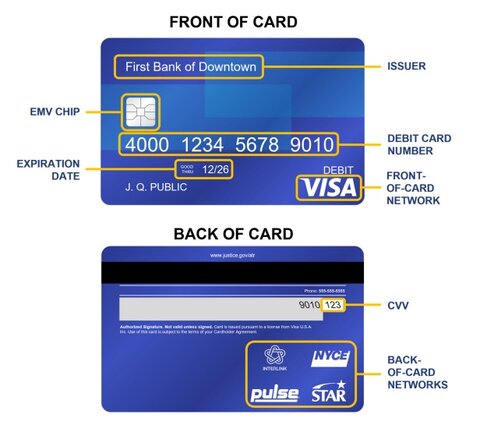

AI is a technology with many different applications. Like all technologies, it is ultimately a tool that can be used by individuals with a variety of intentions and purposes. For this reason, many of the anticipated harms, such as the potential use of AI for fraud or other malicious purposes, may already be addressed by existing laws that target the bad actors using the technology for such purposes rather than the technology itself.

Some agencies, including the Consumer Financial Protection Bureau (CFPB), have already expressed such views. Many key concerns, including discrimination and fraud, are likely already addressed by existing law, and enforcement under that law should focus on the malicious actor rather than on the technology itself.

After gaining a thorough understanding of what existing regulation already covers, the appropriate policymakers could identify which significant harms, if any, remain unaddressed and where greater clarity is needed.

When accounting for the regulations that may affect AI, policymakers should look not only at harms that remain unaddressed by current law but also at cases where existing regulations get in the way and deregulation may be needed. This may include opportunities for regulatory sandboxes in highly regulated sectors such as healthcare and financial services, where AI has potentially beneficial applications but existing regulatory burdens make compliance impossible.

Principle 2: Prevent a Patchwork Through Preemption of State and Local Laws

As with many areas of tech policy, in the absence of a federal framework, some state and local policymakers are choosing to pass their own laws. In the 2023 legislative session, at least 25 states considered legislation pertaining to AI. This legislation varied greatly, from working groups or studies to more formal regulatory regimes. While in some cases states may be able to act as laboratories of democracy, in other cases such actions could create a patchwork that hinders the development of this important technology.

With this in mind, a federal framework for artificial intelligence should consider including preemption, at least in the areas the framework addresses or wherever preemption is otherwise necessary to prevent a disruptive patchwork.

While the regulatory burdens of an AI patchwork could create problems, some areas of law are traditionally reserved for the states, and federal control there would be inappropriate. In some cases, such as the use of AI in their own governments, state and local authorities may be better suited to make those decisions and may even be able to demonstrate the potential benefits of embracing a technology. Preemption should therefore be tailored to preserve the traditional state role while preventing burdens that would keep the same technology from operating across the nation.

One possible model for such preemption comes from the way some states have preempted city bans on home-sharing services like Airbnb while still allowing cities their traditional regulatory role. Home-sharing and other sharing economy services have often been a point of friction in certain cities. But when large cities in a state ban these services or overregulate them, it can disrupt the ability of individuals across the state to use or offer these services and can undermine sound state policy that allows such forms of entrepreneurship.

This approach prevents cities from banning home-sharing outright or imposing regulations that amount to a de facto ban, but allows them to preserve their traditional role over concerns within their control, such as trash and noise ordinances.

A similar approach could be applied to potential preemption in the AI space, clarifying that states may not broadly regulate or ban the use of AI but retain the ability to decide whether and how to use AI in their own government entities or other traditional roles. Still, states should exercise this authority within the traditional scope of their role and not as de facto regulation, for example by barring government contracts with any private-sector entity that uses AI or by imposing requirements that no product could comply with.

Principle 3: Education Over Regulation: Improved AI and Media Literacy

Many concerns have been raised about the potential for AI to manipulate consumers through misinformation and deepfakes, but these concerns are not new. With earlier technologies such as photography and video, society developed norms and adapted how it discerns the truth in response to similar concerns. Rather than trying to dictate norms before they can evolve organically, or stifling innovative applications with a top-down approach, policymakers should embrace education over regulation as a way to empower consumers to make their own informed decisions and better understand new technologies.

Literacy curricula have evolved alongside technological developments, from the creation of the internet to the rise of social media platforms. Improved AI literacy, as well as media literacy more generally, could help consumers become more comfortable with the technology and make sound choices.

Industry, academic, and government efforts to increase AI literacy have given students and adults alike opportunities to build confidence in using artificial intelligence tools. Initiatives like AI4K12, a joint project of the Association for the Advancement of Artificial Intelligence (AAAI) and the Computer Science Teachers Association (CSTA) in collaboration with the National Science Foundation (NSF), are already working to develop national guidelines for AI education in schools and to build an expansive community of innovators developing curricula for a K-12 audience.

In higher education, many universities have started to offer courses on ChatGPT, prompt engineering, basic AI literacy, and AI governance. Research projects like the Responsible AI for Social Empowerment and Education (RAISE) initiative at MIT work with government and industry partners to deploy educational resources that teach students and adult learners how to engage with AI responsibly and successfully. In a society that is increasingly reliant on technology, innovators in every sector are providing many avenues to familiarize users of all ages with innovations such as artificial intelligence.

Many states are already taking this approach as they propose and pass social media literacy bills. While many states have a basic digital literacy requirement, a few are putting forth proposals focused specifically on social media literacy. Florida passed legislation earlier this year mandating a social media curriculum in grades six through twelve. Last month, California Governor Gavin Newsom signed AB 873, a bill that includes social media as part of required digital literacy instruction for all K-12 students.

Other states, such as Missouri, Indiana, and New York, are considering similar bills that would require education on social media use. Such an approach could be expanded to cover AI as well as social media. Policymakers should also consider how this approach could reach adult populations, whether through public service campaigns, simple measures such as providing links to more information from a range of existing civil society groups, or public-private partnerships.

Principle 4: Consider the Government’s Position Towards AI and Protection of Civil Liberties

The government can impact the debate around artificial intelligence by restraining itself when appropriate to protect civil liberties, while also embracing a positive view of the technology in its own rhetoric and use.

Notably, the Trump administration issued an executive order on the government's use of AI that was designed to establish guardrails around key issues such as privacy and civil liberties. It also encouraged agencies to embrace the technology in appropriate ways that could modernize and improve their operations. Further, it encouraged American development of this technology in the global marketplace.

However, the Biden administration seems to have shifted towards a much more precautionary view, focusing on the harms rather than the benefits of this technology.

Ideally, policymakers should identify barriers to government use of AI that can appropriately be removed to improve the services government provides to constituents. At the same time, much as with existing concerns over data, they should provide clear guardrails around AI's use in particular scenarios, such as law enforcement, and should respond to concerns about the government's access to data by addressing data privacy not only in the context of AI but more generally.

Conclusion

The decision by US policymakers in the 1990s to presume that the internet would remain free unless regulation proved necessary is a strong example of how a light-touch approach can empower entrepreneurs, innovators, and users to enjoy a range of technological benefits that could not have been fully predicted. This light-touch approach was critical to allowing the United States to flourish while Europe and others stifled innovation and entrepreneurship with bureaucratic red tape.

As we enter a new technological revolution with artificial intelligence, policymakers should not rush to act based solely on their fears but should instead look to the benefits of the previous era's approach and consider how to continue it with this new technology.